
Can generative AI designed for the enterprise (e.g., AI that autocompletes reports, spreadsheet formulas and so on) ever be interoperable? Along with a coterie of organizations including Cloudera and Intel, the Linux Foundation, the nonprofit organization that supports and maintains a growing number of open source efforts, aims to find out.

The Linux Foundation today announced the launch of the Open Platform for Enterprise AI (OPEA), a project to foster the development of open, multi-provider and composable (i.e., modular) generative AI systems. Under the purview of the Linux Foundation's LF AI & Data org, which focuses on AI- and data-related platform initiatives, OPEA's goal will be to pave the way for the release of "hardened," "scalable" generative AI systems that "harness the best open source innovation from across the ecosystem," LF AI & Data executive director Ibrahim Haddad said in a press release.

"OPEA will unlock new possibilities in AI by creating a detailed, composable framework that stands at the forefront of technology stacks," Haddad said. "This initiative is a testament to our mission to drive open source innovation and collaboration within the AI and data communities under a neutral and open governance model."

In addition to Cloudera and Intel, OPEA, one of the Linux Foundation's Sandbox Projects (an incubator program of sorts), counts among its members enterprise heavyweights like IBM-owned Red Hat, Hugging Face, Domino Data Lab, MariaDB and VMware.

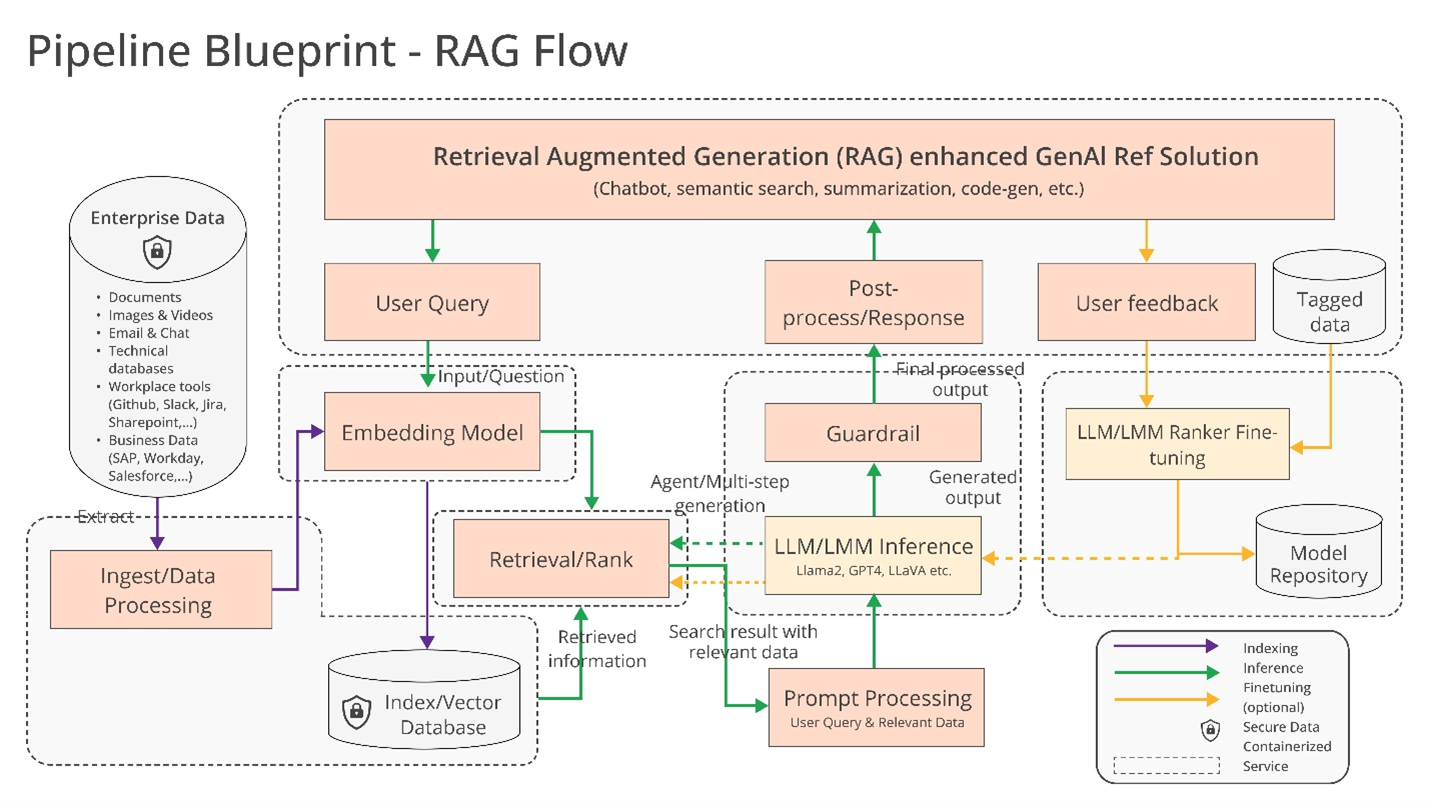

So what might they build together, exactly? Haddad hints at a few possibilities, such as "optimized" support for AI toolchains and compilers, which enable AI workloads to run across different hardware components, as well as "heterogeneous" pipelines for retrieval-augmented generation (RAG).

RAG is becoming increasingly popular in enterprise applications of generative AI, and it's not difficult to see why. Most generative AI models' answers and actions are limited to the data on which they're trained. But with RAG, a model's knowledge base can be extended to information outside the original training data. RAG models reference this outside information, which can take the form of proprietary company data, a public database or some combination of the two, before generating a response or performing a task.

A diagram explaining RAG models.
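To make the RAG flow described above concrete, here is a minimal sketch, assuming a toy bag-of-words retriever and no actual model call. The function names (`tokenize`, `retrieve`, `build_prompt`) and the sample documents are hypothetical and do not come from OPEA or any vendor's API; a production pipeline would typically use learned embeddings and a vector database instead of word counts.

```python
from collections import Counter
import math


def tokenize(text: str) -> list[str]:
    """Lowercase and strip simple punctuation (a toy stand-in for a real tokenizer)."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query (the "retrieval" step)."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Augment the question with retrieved outside data before it reaches a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical "proprietary company data" the base model was never trained on.
docs = [
    "Our refund window is 30 days for enterprise customers.",
    "The office cafeteria opens at 8 am.",
]
print(build_prompt("What is the refund window?", docs))
```

The augmented prompt, rather than the bare question, is what would be sent to the generative model, which is how RAG lets a model draw on information outside its training data.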

Intel offered a few more details in its own press release:

> Enterprises are challenged with a do-it-yourself approach [to RAG] because there are no de facto standards across components that allow enterprises to choose and deploy RAG solutions that are open and interoperable and that help them quickly get to market. OPEA intends to address these issues by collaborating with the industry to standardize components, including frameworks, architecture blueprints and reference solutions.
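One way to picture what "standardized, interoperable components" could mean in practice is a shared interface that any vendor's component can implement. The sketch below assumes a hypothetical `Retriever` protocol defined with Python's `typing.Protocol`; it is an illustration of the composability idea only, not OPEA's actual specification, and the two retriever classes are made-up examples.

```python
from typing import Protocol


class Retriever(Protocol):
    """Hypothetical shared interface: anything with this method can be plugged in."""

    def retrieve(self, query: str, k: int) -> list[str]: ...


class KeywordRetriever:
    """One vendor's strategy: rank documents by how many query words they share."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str, k: int) -> list[str]:
        terms = set(query.lower().split())
        ranked = sorted(self.docs, key=lambda d: len(terms & set(d.lower().split())), reverse=True)
        return ranked[:k]


class RecencyRetriever:
    """Another vendor's strategy: return the newest documents, ignoring the query."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str, k: int) -> list[str]:
        # Reverse first, then slice, so k=0 correctly returns an empty list.
        return self.docs[::-1][:k]


def build_context(retriever: Retriever, query: str, k: int = 1) -> str:
    """Pipeline step that depends only on the interface, not on a specific vendor."""
    return "\n".join(retriever.retrieve(query, k))


docs = ["gpu driver install guide", "holiday calendar 2024"]
print(build_context(KeywordRetriever(docs), "install gpu driver"))
print(build_context(RecencyRetriever(docs), "install gpu driver"))
```

Because `build_context` only relies on the `retrieve` method, either component can be swapped in without changing the rest of the pipeline, which is the kind of mix-and-match that agreed-upon component standards are meant to enable.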

Evaluation will also be a key part of what OPEA tackles.

In its GitHub repository, OPEA proposes a rubric for grading generative AI systems along four axes: performance, features, trustworthiness and "enterprise-grade" readiness. Performance, as OPEA defines it, pertains to "black-box" benchmarks from real-world use cases. Features is an appraisal of a system's interoperability, deployment choices and ease of use. Trustworthiness looks at an AI model's ability to guarantee "robustness" and quality. And enterprise readiness focuses on the requirements to get a system up and running without major issues.
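A four-axis rubric like this maps naturally onto a small record type. The sketch below is hypothetical: the 0 to 5 scale, the equal weighting in `overall()` and the `weakest_axis()` helper are assumptions made for illustration and are not taken from OPEA's repository, which this article does not quote in that level of detail.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RubricScore:
    """Scores on the four axes described above; the 0-5 scale is a hypothetical choice."""

    performance: float
    features: float
    trustworthiness: float
    enterprise_readiness: float

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 0-5 range.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0 <= value <= 5:
                raise ValueError(f"{f.name} must be between 0 and 5, got {value}")

    def overall(self) -> float:
        """Unweighted mean of the four axes (equal weighting is an assumption)."""
        values = [getattr(self, f.name) for f in fields(self)]
        return sum(values) / len(values)

    def weakest_axis(self) -> str:
        """Name of the lowest-scoring axis, useful for flagging where a system falls short."""
        return min(fields(self), key=lambda f: getattr(self, f.name)).name


score = RubricScore(performance=4, features=3, trustworthiness=5, enterprise_readiness=2)
print(score.overall(), score.weakest_axis())
```

Keeping the axes as separate fields, rather than collapsing them into one number up front, preserves the per-axis breakdown that a grading report would presumably want to show.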

Rachel Roumeliotis, director of open source strategy at Intel, says that OPEA will work with the open source community to offer tests based on the rubric, and will provide assessments and grading of generative AI deployments on request.

OPEA's other endeavors are a bit up in the air at the moment. But Haddad floated the potential of open model development along the lines of Meta's expanding Llama family and Databricks' DBRX. Toward that end, in the OPEA repo, Intel has already contributed reference implementations for a generative-AI-powered chatbot, document summarizer and code generator optimized for its Xeon 6 and Gaudi 2 hardware.

Now, OPEA's members are very clearly invested (and self-interested, for that matter) in building tooling for enterprise generative AI. Cloudera recently launched partnerships to create what it's pitching as an "AI ecosystem" in the cloud. Domino offers a suite of apps for building and auditing business-forward generative AI. And VMware, oriented toward the infrastructure side of enterprise AI, last August rolled out new "private AI" compute products.

The question is whether, under OPEA, these vendors will actually work together to build cross-compatible AI tools.

There's an obvious benefit to doing so. Customers will happily draw on multiple vendors depending on their needs, resources and budgets. But history has shown that it's all too easy to drift toward vendor lock-in. Let's hope that's not the ultimate outcome here.
