
OpenAI’s video generation tool Sora took the AI community by surprise in February with fluid, realistic video that seems miles ahead of competitors. But the carefully stage-managed debut left out a lot of details, details that have since been filled in by a filmmaker given early access to create a short using Sora.

Shy Kids is a digital production team based in Toronto that was picked by OpenAI as one of a few to produce short films, essentially for OpenAI promotional purposes, though they were given considerable creative freedom in creating “air head.” In an interview with visual effects news outlet fxguide, post-production artist Patrick Cederberg described “actually using Sora” as part of his work.

Perhaps the most important takeaway for most readers is simply this: While OpenAI’s post highlighting the shorts lets the reader assume they more or less emerged fully formed from Sora, the reality is that these were professional productions, complete with robust storyboarding, editing, color correction, and post work like rotoscoping and VFX. Just as Apple says “shot on iPhone” but doesn’t show the studio setup, professional lighting, and color work after the fact, the Sora post only talks about what it lets people do, not how they actually did it.

Cederberg’s interview is interesting and quite non-technical, so if you’re interested at all, head over to fxguide and read it. But here are some interesting nuggets about using Sora that tell us that, as impressive as it is, the model is perhaps less of a giant leap forward than we thought.

“Control is still the thing that is the most desirable and also the most elusive at this point. … The closest we could get was just being hyper-descriptive in our prompts. Explaining wardrobe for characters, as well as the type of balloon, was our way around consistency because shot to shot / generation to generation, there isn’t the feature set in place yet for full control over consistency.”

In other words, matters that are simple in traditional filmmaking, like choosing the color of a character’s clothing, take elaborate workarounds and checks in a generative system, because each shot is created independently of the others. That could obviously change, but it is certainly much more laborious at the moment.
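As a rough illustration of the “hyper-descriptive” workaround, the sketch below repeats one fixed character description in every shot prompt. It is not Shy Kids’ actual workflow and it calls no Sora API; `CharacterSheet`, `build_prompt`, and the wardrobe and balloon wording are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CharacterSheet:
    """Details repeated verbatim in every shot prompt (hypothetical values)."""
    wardrobe: str
    head: str


def build_prompt(sheet: CharacterSheet, action: str) -> str:
    # Restate the same wardrobe and balloon wording each time, because each
    # generation is independent and carries nothing over from earlier shots.
    return f"A man wearing {sheet.wardrobe}, with {sheet.head} for a head, {action}."


# Made-up example values, not taken from the film's real prompts.
sheet = CharacterSheet(
    wardrobe="a navy suit and a red scarf",
    head="a round yellow latex balloon",
)
actions = ("walks through a market", "waves at a passerby")
prompts = [build_prompt(sheet, action) for action in actions]
```

Keeping the description in one place at least ensures the wording never drifts between prompts, though by Cederberg’s account this only nudges the model toward consistency and does not guarantee it.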

Sora outputs had to be watched for unwanted elements as well: Cederberg described how the model would routinely generate a face on the balloon that the main character has for a head, or a string hanging down the front. These had to be removed in post, another time-consuming process, if they couldn’t get the prompt to exclude them.

Precise timing and movements of characters or the camera aren’t really possible: “There’s a little bit of temporal control about where these different actions happen in the actual generation, but it’s not precise … it’s kind of a shot in the dark,” said Cederberg.

For example, timing a gesture like a wave is a very approximate, suggestion-driven process, unlike manual animation. And a shot like a pan upward on the character’s body may or may not reflect what the filmmaker wants, so the team in this case rendered a shot composed in portrait orientation and did a crop pan in post. The generated clips were also often in slow motion for no particular reason.
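To make the crop-pan idea concrete, here is a minimal sketch that computes the crop rectangle for each frame of an upward pan across a portrait clip. The function name, the 1080×1920 source size, and the 16:9 output are assumptions for illustration; the interview does not give the team’s actual resolutions or tools.

```python
def crop_pan_windows(src_w, src_h, aspect_w, aspect_h, n_frames):
    """Return (x, y, w, h) crop rectangles for an upward pan over a tall frame.

    y is measured from the top of the source frame, so an upward pan starts
    with the window at the bottom and ends with it at the top.
    """
    crop_w = src_w
    crop_h = src_w * aspect_h // aspect_w  # landscape window height
    if crop_h > src_h:
        raise ValueError("source frame is too short for this aspect ratio")
    travel = src_h - crop_h                # vertical distance the window moves
    steps = max(n_frames - 1, 1)
    return [
        (0, travel * (steps - i) // steps, crop_w, crop_h)
        for i in range(n_frames)
    ]


# Assumed sizes: a 1080x1920 portrait source cropped to a 16:9 window.
windows = crop_pan_windows(1080, 1920, 16, 9, n_frames=5)
```

Because the move is done in the edit, its speed and framing are fully under the filmmaker’s control, which is exactly what the prompt alone could not guarantee.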

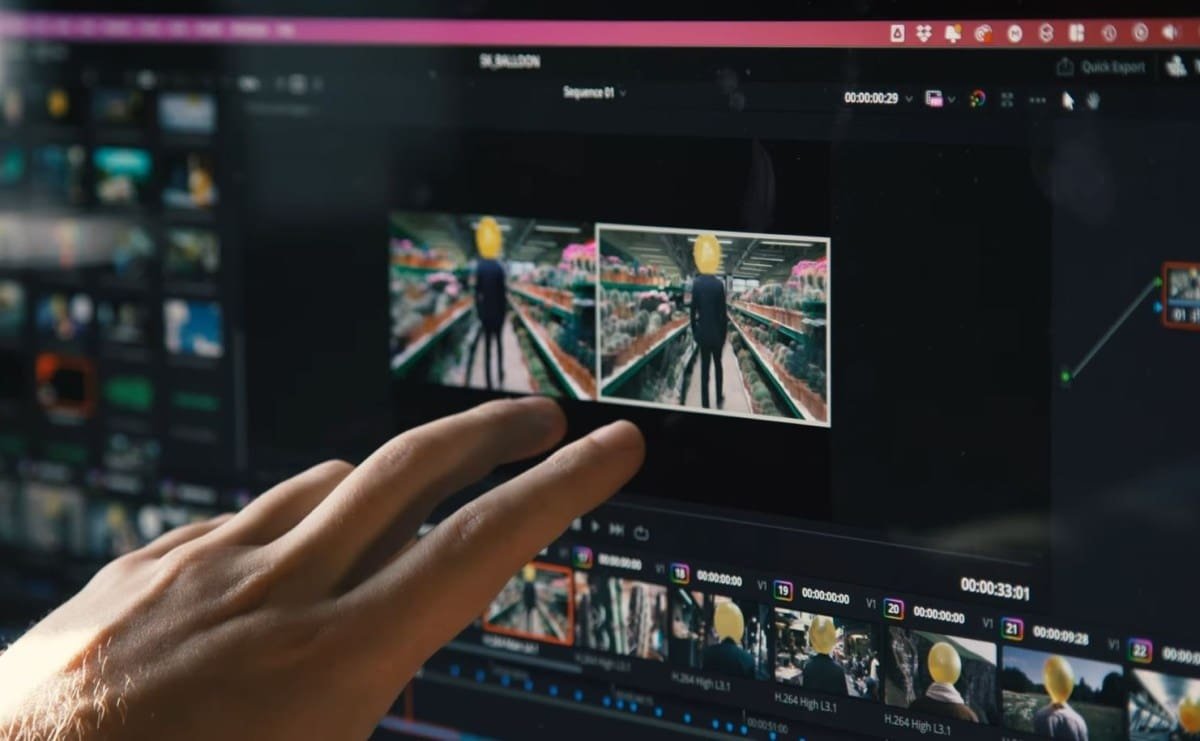

Example of a shot as it came out of Sora and how it ended up in the short. Image Credits: Shy Kids

In fact, the results of using the everyday language of filmmaking, like “panning right” or “tracking shot,” were inconsistent in general, Cederberg said, which the team found pretty surprising.

“The researchers, before they approached artists to play with the tool, hadn’t really been thinking like filmmakers,” he said.

As a result, the team did hundreds of generations, each 10 to 20 seconds long, and ended up using only a handful. Cederberg estimated the ratio at 300:1, though of course we would probably all be surprised at the ratio on an ordinary shoot.

The team actually did a little behind-the-scenes video explaining some of the issues they ran into, if you’re curious. Like a lot of AI-adjacent content, the comments are pretty critical of the whole endeavor, though not quite as vituperative as the AI-assisted ad we saw pilloried recently.

The last interesting wrinkle pertains to copyright: If you ask Sora to give you a “Star Wars” clip, it will refuse. And if you try to get around it with “robed man with a laser sword on a retro-futuristic spaceship,” it will also refuse, as by some mechanism it recognizes what you’re trying to do. It also refused to do an “Aronofsky type shot” or a “Hitchcock zoom.”

On one hand, it makes perfect sense. But it does prompt the question: If Sora knows what these are, does that mean the model was trained on that content, the better to recognize that it is infringing? OpenAI, which keeps its training data cards close to the vest (to the point of absurdity, as with CTO Mira Murati’s interview with Joanna Stern), will almost certainly never tell us.

As for Sora and its use in filmmaking, it’s clearly a powerful and useful tool in its place, but its place is not “creating films out of whole cloth.” Yet. As another villain once famously said, “that comes later.”
