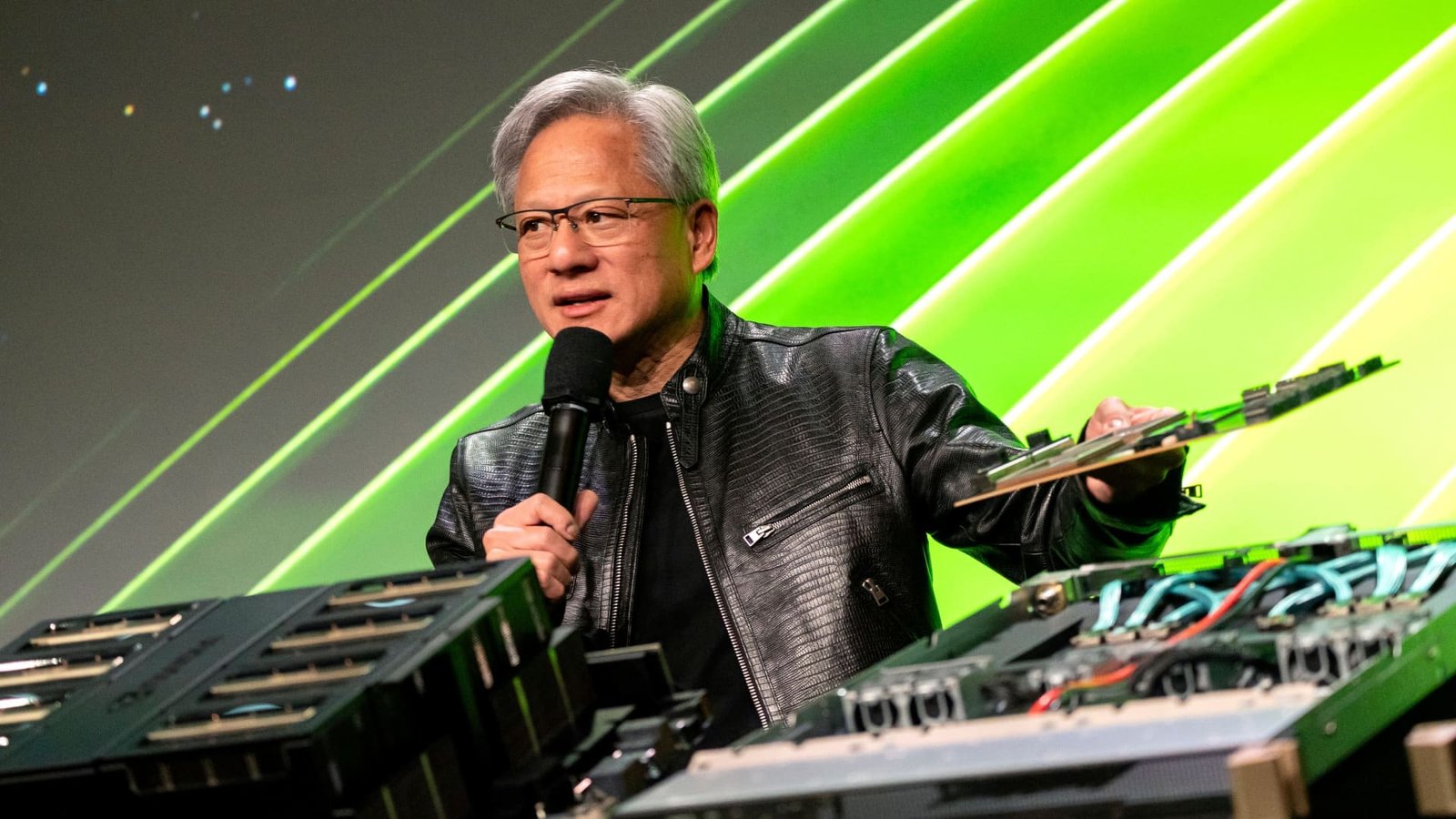

Jensen Huang, co-founder and CEO of Nvidia Corp., during the Nvidia GPU Technology Conference (GTC) in San Jose, California, US, on Tuesday, March 19, 2024.

David Paul Morris | Bloomberg | Getty Images

Nvidia’s 27% rally in May pushed its market cap to $2.7 trillion, behind only Microsoft and Apple among the most valuable public companies in the world. The chipmaker reported a tripling in year-over-year sales for the third straight quarter, driven by soaring demand for its artificial intelligence processors.

Mizuho Securities estimates that Nvidia controls between 70% and 95% of the market for AI chips used for training and deploying models like OpenAI’s GPT. Underscoring Nvidia’s pricing power is a 78% gross margin, a stunningly high number for a hardware company that has to manufacture and ship physical products.

Rival chipmakers Intel and Advanced Micro Devices reported gross margins in the latest quarter of 41% and 47%, respectively.

Nvidia’s position in the AI chip market has been described as a moat by some experts. Its flagship AI graphics processing units (GPUs), such as the H100, coupled with the company’s CUDA software, gave it such a head start on the competition that switching to an alternative can seem almost unthinkable.

Still, Nvidia CEO Jensen Huang, whose net worth has swelled from $3 billion to about $90 billion in the past five years, has said he’s “stressed and worried” about his 31-year-old company losing its edge. He acknowledged at a conference late last year that there are many powerful competitors on the rise.

“I don’t think people are trying to put me out of business,” Huang said in November. “I probably know they’re trying to, so that’s different.”

Nvidia has committed to releasing a new AI chip architecture every year, rather than every other year as was the case historically, and to releasing new software that could more deeply entrench its chips in AI software.

But Nvidia’s GPU isn’t alone in being able to run the complex math that underpins generative AI. If less powerful chips can do the same work, Huang might be justifiably paranoid.

The transition from training AI models to what’s called inference, or deploying the models, could also give companies an opportunity to replace Nvidia’s GPUs, especially if the alternatives are less expensive to buy and run. Nvidia’s flagship chip costs roughly $30,000 or more, giving customers plenty of incentive to seek alternatives.

“Nvidia would love to have 100% of it, but customers would not love for Nvidia to have 100% of it,” said Sid Sheth, co-founder of aspiring rival D-Matrix. “It’s just too big of an opportunity. It would be too unhealthy if any one company took all of it.”

Founded in 2019, D-Matrix plans to release a semiconductor card for servers later this year that aims to reduce the cost and latency of running AI models. The company raised $110 million in September.

In addition to D-Matrix, companies ranging from multinational corporations to nascent startups are fighting for a slice of the AI chip market, which could reach $400 billion in annual sales in the next five years, according to market analysts and AMD. Nvidia has generated about $80 billion in revenue over the past four quarters, and Bank of America estimates the company sold $34.5 billion in AI chips last year.

Many companies taking on Nvidia’s GPUs are betting that a different architecture or certain trade-offs could produce a better chip for particular tasks. Device makers are also developing technology that could end up doing much of the AI computing that currently takes place in large GPU-based clusters in the cloud.

“Nobody can deny that today Nvidia is the hardware you want to train and run AI models,” Fernando Vidal, co-founder of 3Fourteen Research, told CNBC. “But there’s been incremental progress in leveling the playing field, from hyperscalers working on their own chips to even little startups designing their own silicon.”

AMD CEO Lisa Su wants investors to believe there’s plenty of room for many successful companies in the space.

“The key is that there are a lot of options there,” Su told reporters in December, when her company launched its latest AI chip. “I think we’re going to see a situation where there’s not only one solution, there will be multiple solutions.”

Other huge chipmakers

Lisa Su displays an AMD Instinct MI300 chip as she delivers a keynote address at CES 2023 in Las Vegas, Nevada, on Jan. 4, 2023.

David Becker | Getty Images

AMD makes GPUs for PC gaming and, like Nvidia, is adapting them for AI inside data centers. Its flagship chip is the Instinct MI300X. Microsoft has already bought AMD processors and offers access to them through its Azure cloud.

At launch, Su highlighted the chip’s strength at inference, rather than competing with Nvidia for training. Recently, Microsoft said it was using AMD Instinct GPUs to serve its Copilot models. Morgan Stanley analysts took the news as a sign that AMD’s AI chip sales could surpass $4 billion this year, the company’s public target.

Intel, which was surpassed by Nvidia last year in terms of revenue, is also trying to establish a presence in AI. The company recently announced the third version of its AI accelerator, Gaudi 3. This time Intel compared it directly to the competition, describing it as a more cost-effective alternative that beats Nvidia’s H100 at running inference and is also faster at training models.

Bank of America analysts estimated recently that Intel will have less than 1% of the AI chip market this year. Intel says it has a $2 billion backlog of orders for the chip.

The main obstacle to broader adoption may be software. AMD and Intel are both participating in a large industry group called the UXL Foundation, which includes Google and is working to create free alternatives to Nvidia’s CUDA for controlling hardware in AI applications.

Nvidia’s leading customers

One potential challenge for Nvidia is that it’s competing against some of its biggest customers. Cloud providers including Google, Microsoft and Amazon are all building processors for internal use. The Big Tech three, plus Oracle, make up over 40% of Nvidia’s revenue.

Amazon introduced its own AI-oriented chips in 2018, under the Inferentia brand name. Inferentia is now on its second version. In 2021, Amazon Web Services debuted Trainium, targeted at training. Customers can’t buy the chips, but they can rent systems through AWS, which markets the chips as more cost-efficient than Nvidia’s.

Google is perhaps the cloud provider most committed to its own silicon. The company has been using what it calls Tensor Processing Units (TPUs) since 2015 to train and deploy AI models. In May, Google announced the sixth version of its chip, Trillium, which the company said was used to develop its models, including Gemini and Imagen.

Google also uses Nvidia chips and offers them through its cloud.

Microsoft isn’t as far along. The company said last year that it was developing its own AI accelerator and processor, called Maia and Cobalt.

Meta isn’t a cloud provider, but the company needs massive amounts of computing power to run its software and website and to serve ads. While the Facebook parent company is buying billions of dollars’ worth of Nvidia processors, it said in April that some of its homegrown chips were already in data centers and enabled “higher performance” compared to GPUs.

JPMorgan analysts estimated in May that the market for building custom chips for big cloud providers could be worth as much as $30 billion, with potential growth of 20% per year.

Startups

Cerebras’ WSE-3 chip is one example of new silicon from startups designed to run and train artificial intelligence.

Cerebras Systems

Venture capitalists see opportunities for emerging companies to jump into the game. They invested $6 billion in AI semiconductor companies in 2023, up slightly from $5.7 billion a year earlier, according to data from PitchBook.

It’s a tough area for startups, as semiconductors are expensive to design, develop and manufacture. But there are opportunities for differentiation.

For Cerebras Systems, an AI chipmaker in Silicon Valley, the focus is on the basic operations and bottlenecks of AI, versus the more general-purpose nature of a GPU. The company was founded in 2015 and was valued at $4 billion during its most recent fundraising, according to Bloomberg.

The Cerebras chip, WSE-2, puts GPU capabilities along with central processing and additional memory into a single device, which is better for training large models, said CEO Andrew Feldman.

“We use a giant chip, they use a lot of little chips,” Feldman said. “They’ve got challenges of moving data around, we don’t.”

Feldman said his company, which counts Mayo Clinic, GlaxoSmithKline and the U.S. Military as customers, is winning business for its supercomputing systems even when going up against Nvidia.

“There’s ample competition and I think that’s healthy for the ecosystem,” Feldman said.

Sheth from D-Matrix said his company plans to release a card with its chiplet later this year that will allow for more computation in memory, as opposed to on a chip like a GPU. D-Matrix’s product can be slotted into an AI server alongside existing GPUs, where it takes work off Nvidia chips and helps lower the cost of generative AI.

Customers “are very receptive and very incentivized to enable a new solution to come to market,” Sheth said.

Apple and Qualcomm

Apple iPhone 15 series devices are displayed for sale at The Grove Apple retail store on release day in Los Angeles, California, on September 22, 2023.

Patrick T. Fallon | Afp | Getty Images

The biggest threat to Nvidia’s data center business may be a change in where processing happens.

Developers are increasingly betting that AI work will move from server farms to the laptops, PCs and phones we own.

Big models like the ones developed by OpenAI require massive clusters of powerful GPUs for inference, but companies like Apple and Microsoft are developing “small models” that require less power and data and can run on a battery-powered device. They may not be as capable as the latest version of ChatGPT, but there are other tasks they can handle, such as summarizing text or visual search.

Apple and Qualcomm are updating their chips to run AI more efficiently, adding specialized sections for AI models called neural processors, which can have privacy and speed advantages.

Qualcomm recently announced a PC chip that will allow laptops to run Microsoft AI services on the device. The company has also invested in a number of chipmakers making lower-power processors to run AI algorithms outside of a smartphone or laptop.

Apple has been marketing its latest laptops and tablets as optimized for AI because of the neural engine on its chips. At its upcoming developer conference, Apple is planning to show off a slew of new AI features, likely running on the company’s iPhone-powering silicon.
