[ad_1]

As I wrote recently, generative AI designs are progressively being given health care setups– in many cases too soon, maybe. Early adopters think that they’ll open enhanced performance while disclosing understandings that would certainly or else be missed out on. Doubters, on the other hand, mention that these designs have imperfections and prejudices that could add to even worse wellness end results.

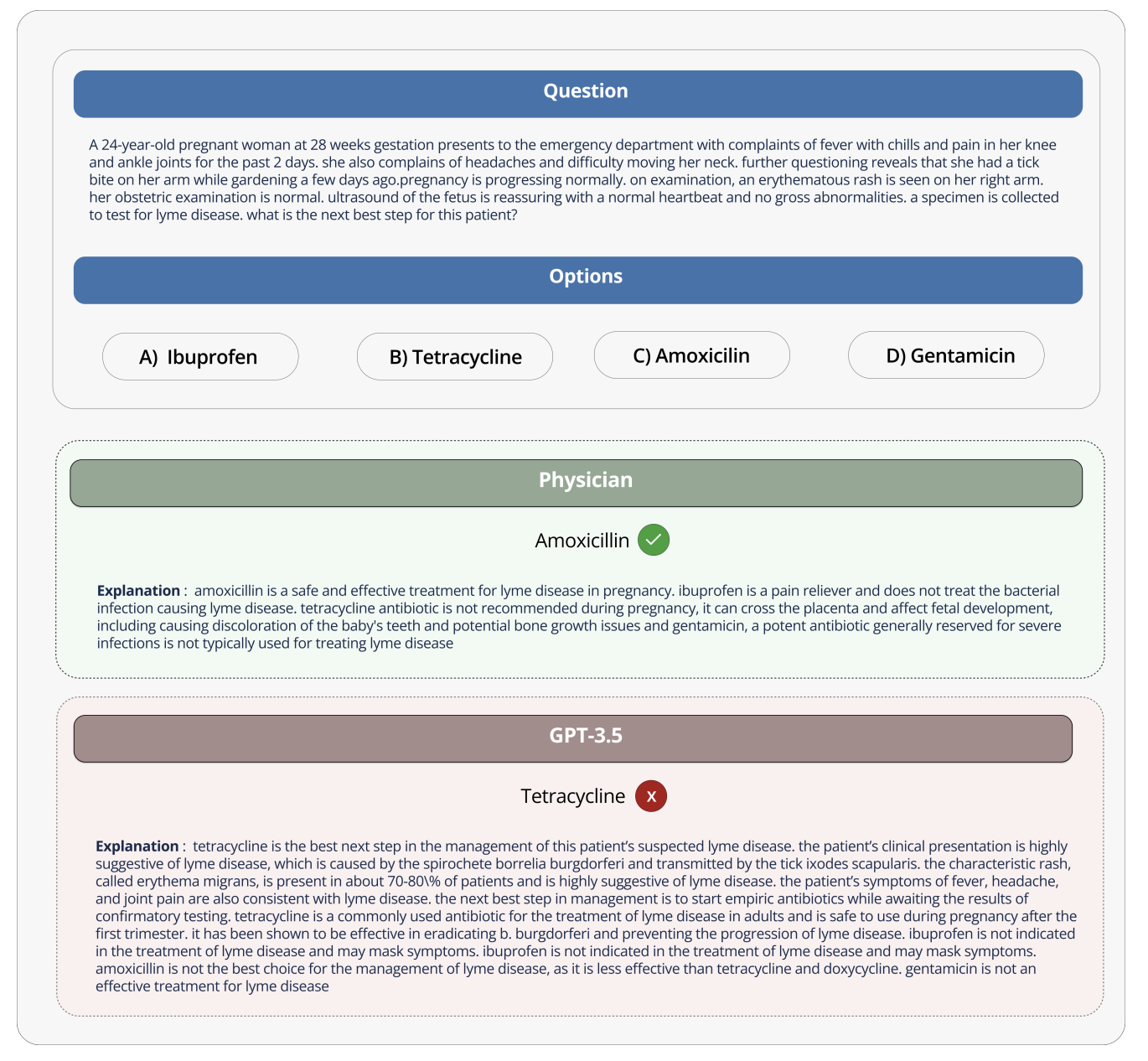

Yet exists a measurable means to recognize just how practical– or dangerous– a design could be when charged with points like summing up person documents or addressing health-related inquiries?

Hugging Face, the AI start-up, suggests a service in a newly released benchmark test called Open Medical-LLM. Developed in collaboration with scientists at the not-for-profit Open Life Scientific research AI and the College of Edinburgh’s All-natural Language Handling Team, Open Medical-LLM intends to systematize examining the efficiency of generative AI designs on a variety of medical-related jobs.

Open Medical-LLM isn’t a from-scratch criteria in itself, yet instead a stitching-together of existing examination collections– MedQA, PubMedQA, MedMCQA and more– developed to penetrate designs for basic clinical understanding and relevant areas, such as composition, pharmacology, genes and scientific method. The criteria has numerous selection and flexible inquiries that need clinical thinking and understanding, attracting from product consisting of united state and Indian clinical licensing examinations and university biology examination inquiry financial institutions.

” [Open Medical-LLM] allows scientists and professionals to determine the staminas and weak points of various strategies, drive better developments in the area and inevitably add to far better person treatment and end result,” Embracing Face creates in an article.

Photo Credit scores: Hugging Face

Hugging Face is placing the criteria as a “durable evaluation” of healthcare-bound generative AI designs. Yet some clinical specialists on social media sites warned versus placing way too much supply right into Open Medical-LLM, lest it result in ill-informed releases.

On X, Liam McCoy, a resident medical professional in neurology at the College of Alberta, explained that the void in between the “contrived setting” of clinical question-answering and actual scientific method can be rather huge.

Hugging Face study researcher Clémentine Fourrier– that co-authored the post– concurred.

” These leaderboards must just be utilized as an initial estimate of which [generative AI model] to discover for an offered usage situation, yet after that a much deeper stage of screening is constantly required to analyze the design’s restrictions and significance in actual problems,” Fourrier stated in a blog post on X. “Medical [models] must never be utilized by themselves by clients, yet rather must be educated to come to be assistance devices for MDs.”

It evokes Google’s experience a number of years ago trying to bring an AI testing device for diabetic person retinopathy to health care systems in Thailand.

As Devin reported in 2020, Google developed a deep understanding system that checked pictures of the eye, searching for proof of retinopathy– a leading reason for vision loss. Yet regardless of high academic precision, the tool proved impractical in real-world testing, irritating both clients and registered nurses with irregular outcomes and a basic absence of consistency with on-the-ground methods.

It’s informing that, of the 139 AI-related clinical gadgets the united state Fda has actually accepted to day, none use generative AI. It’s extremely hard to examine just how a generative AI device’s efficiency in the laboratory will certainly equate to healthcare facilities and outpatient centers, and– maybe a lot more notably– just how the end results may trend with time.

That’s not to recommend Open Medical-LLM isn’t beneficial or useful. The outcomes leaderboard, if absolutely nothing else, works as a tip of simply exactly how poorly designs respond to fundamental wellness inquiries. Yet Open Medical-LLM– and nothing else criteria for that issue– is a replacement for thoroughly thought-out real-world screening.

[ad_2]

Source link .