Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of artificial intelligence, along with notable research and experiments we didn’t cover on their own.

Today, Meta released the latest in its Llama series of generative AI models: Llama 3 8B and Llama 3 70B. Capable of analyzing and writing text, the models are “open sourced,” Meta said, intended to be a “foundational piece” of systems that developers design with their unique goals in mind.

“We believe these are the best open source models of their class, period,” Meta wrote in a blog post. “We are embracing the open source ethos of releasing early and often.”

There’s only one problem: the Llama 3 models aren’t really “open source,” at least not in the strictest definition.

Open source implies that developers can use the models however they choose, unfettered. But in the case of Llama 3, as with Llama 2, Meta has imposed certain licensing restrictions. For example, Llama models can’t be used to train other models. And app developers with over 700 million monthly users must request a special license from Meta.

Debates over the definition of open source aren’t new. But as companies in the AI space play fast and loose with the term, it’s injecting fuel into long-running philosophical arguments.

Last August, a study co-authored by researchers at Carnegie Mellon, the AI Now Institute and the Signal Foundation found that many AI models branded as “open source” come with big catches, not just Llama. The data required to train the models is kept secret. The compute power needed to run them is beyond the reach of many developers. And the labor to fine-tune them is prohibitively expensive.

So if these models aren’t truly open source, what are they, exactly? That’s a good question; defining open source with respect to AI isn’t an easy task.

One significant unresolved question is whether copyright, the foundational IP mechanism open source licensing is based on, can be applied to the various components and pieces of an AI project, in particular a model’s inner scaffolding (e.g. embeddings). Then there’s the mismatch to overcome between the perception of open source and how AI actually functions: open source was devised in part to ensure that developers could study and modify code without restrictions. With AI, though, which ingredients you need to do the studying and modifying is open to interpretation.

Wading through all the uncertainty, the Carnegie Mellon study does make clear the harm inherent in tech giants like Meta co-opting the phrase “open source.”

Often, “open source” AI projects like Llama end up kicking off news cycles (free marketing) and providing technical and strategic benefits to the projects’ maintainers. The open source community rarely sees these same benefits, and when it does, they’re marginal compared to the maintainers’.

Instead of democratizing AI, “open source” AI projects, especially those from big tech companies, tend to entrench and expand centralized power, say the study’s co-authors. That’s good to keep in mind the next time a major “open source” model release comes around.

Here are some other AI stories of note from the past few days:

- Meta updates its chatbot: Coinciding with the Llama 3 debut, Meta upgraded Meta AI, its AI chatbot across Facebook, Messenger, Instagram and WhatsApp, with a Llama 3-powered backend. It also launched new features, including faster image generation and access to web search results.

- AI-generated porn: Ivan writes about how the Oversight Board, Meta’s semi-independent policy council, is turning its attention to how the company’s social platforms are handling explicit, AI-generated images.

- Snap watermarks: Social media service Snap plans to add watermarks to AI-generated images on its platform. A translucent version of the Snap logo with a sparkle emoji, the new watermark will be added to any AI-generated image exported from the app or saved to the camera roll.

- The new Atlas: Hyundai-owned robotics company Boston Dynamics has unveiled its next-generation humanoid Atlas robot, which, unlike its hydraulics-powered predecessor, is all-electric and much friendlier in appearance.

- Humanoids on humanoids: Not to be outdone by Boston Dynamics, the founder of Mobileye, Amnon Shashua, has launched a new startup, Menteebot, focused on building bipedal robotic systems. A demo video shows Menteebot’s prototype walking over to a table and picking up fruit.

- Reddit, translated: In an interview with Amanda, Reddit CPO Pali Bhat revealed that an AI-powered language translation feature to bring the social network to a more global audience is in the works, along with an assistive moderation tool trained on Reddit moderators’ past decisions and actions.

- AI-generated LinkedIn content: LinkedIn has quietly started testing a new way to boost its revenues: a LinkedIn Premium Company Page subscription, which, for fees that appear to be as steep as $99/month, includes AI to write content and a suite of tools to grow follower counts.

- A Bellwether: Google parent Alphabet’s moonshot factory, X, today unveiled Project Bellwether, its latest bid to apply tech to some of the world’s biggest problems. Here, that means using AI tools to identify natural disasters like wildfires and flooding as quickly as possible.

- Protecting kids with AI: Ofcom, the regulator charged with enforcing the U.K.’s Online Safety Act, plans to launch an exploration into how AI and other automated tools can be used to proactively detect and remove illegal content online, specifically to shield children from harmful content.

- OpenAI lands in Japan: OpenAI is expanding to Japan, with the opening of a new Tokyo office and plans for a GPT-4 model optimized specifically for the Japanese language.

More machine learnings

Image Credits: DrAfter123 / Getty Images

Can a chatbot change your mind? Swiss researchers found that not only can they, but if they are pre-armed with some personal information about you, they can actually be more persuasive in a debate than a human with that same info.

“This is Cambridge Analytica on steroids,” said project lead Robert West from EPFL. The researchers suspect the model (GPT-4 in this case) drew from its vast stores of arguments and facts online to present a more compelling and confident case. But the outcome kind of speaks for itself. Don’t underestimate the power of LLMs in matters of persuasion, West warned: “In the context of the upcoming US elections, people are concerned because that’s where this kind of technology is always first battle tested. One thing we know for sure is that people will be using the power of large language models to try to swing the election.”

Why are these models so good at language anyway? That’s one area with a long history of research, going back to ELIZA. If you’re curious about one of the people who’s been there for a lot of it (and performed no small amount of it himself), check out this profile on Stanford’s Christopher Manning. He was just awarded the John von Neumann Medal; congrats!

In a provocatively titled interview, another long-term AI researcher (who has graced the TechCrunch stage as well), Stuart Russell, and postdoc Michael Cohen speculate on “How to keep AI from killing us all.” Probably a good thing to figure out sooner rather than later! It’s not a superficial discussion, though: these are smart people talking about how we can actually understand the motivations (if that’s the right word) of AI models and how regulations ought to be built around them.

The interview is actually about a paper in Science published earlier this month, in which they propose that advanced AIs capable of acting strategically to achieve their goals, which they call “long-term planning agents,” may be impossible to test. Essentially, if a model learns to “understand” the testing it must pass in order to succeed, it may very well learn ways to creatively negate or circumvent that testing. We’ve seen it at a small scale, why not a large one?

Russell proposes restricting the hardware needed to make such agents … but of course, Los Alamos and Sandia National Labs just got their deliveries. LANL just had the ribbon-cutting ceremony for Venado, a new supercomputer intended for AI research, composed of 2,560 Grace Hopper Nvidia chips.

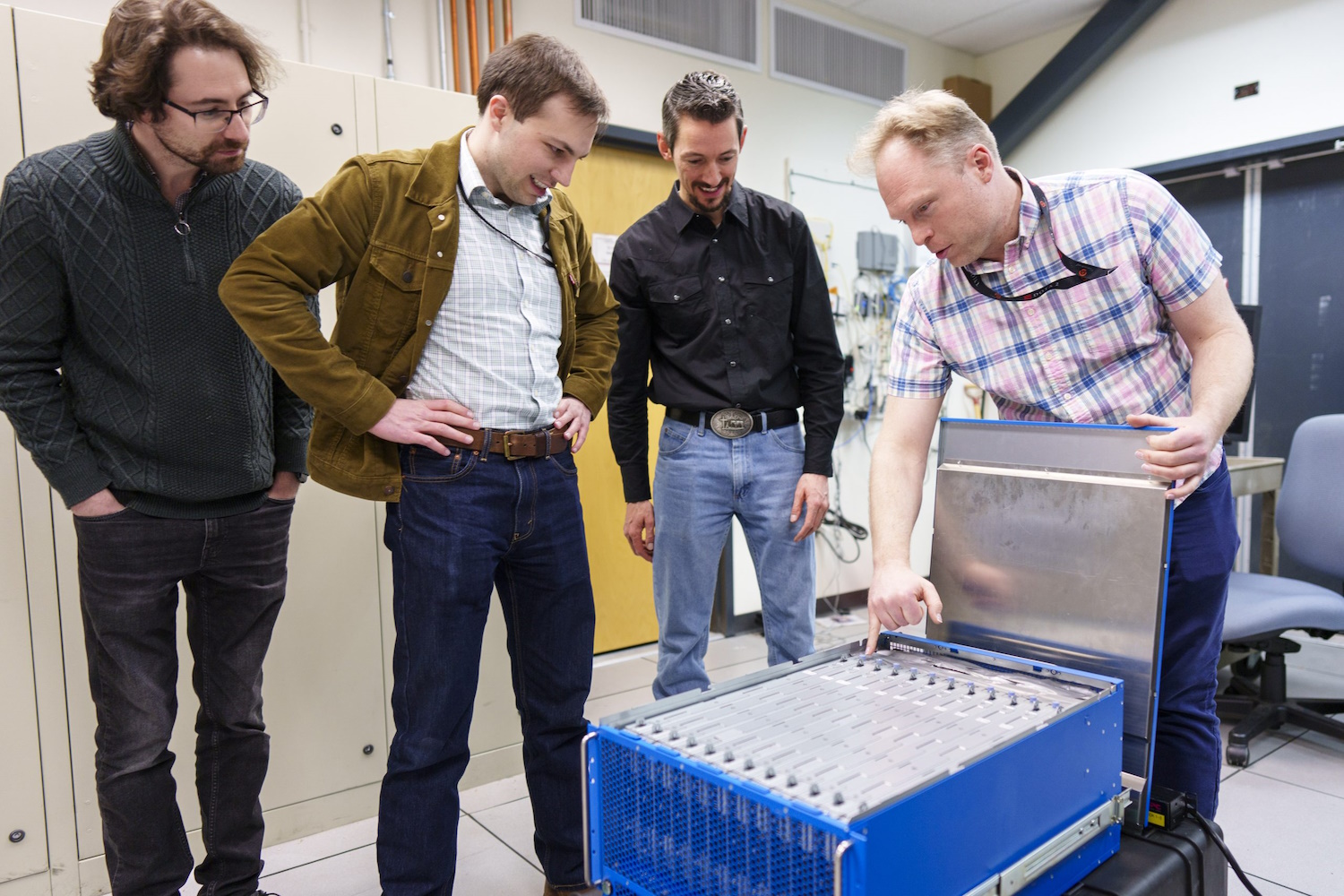

Researchers look into the new neuromorphic computer.

And Sandia just received “an extraordinary brain-based computing system called Hala Point,” with 1.15 billion artificial neurons, built by Intel and believed to be the largest such system in the world. Neuromorphic computing, as it’s called, isn’t intended to replace systems like Venado, but to pursue new methods of computation that are more brain-like than the rather statistics-focused approach we see in modern models.

“With this billion-neuron system, we will have an opportunity to innovate at scale both new AI algorithms that may be more efficient and smarter than existing algorithms, and new brain-like approaches to existing computer algorithms such as optimization and modeling,” said Sandia researcher Brad Aimone. Sounds dandy … just dandy!
